
Google has announced its latest multimodal AI model, which immediately stirred the industry. However, the demo published on YouTube was fake. Shares of its parent company, Alphabet, rose 5.3% after the announcement of Gemini, which Google promises will be a significant leap in AI by outperforming OpenAI's Microsoft-backed GPT-4 model. By comparison, Microsoft had seen a significant jump of 50% in 2023, while Google had gained over $500 billion.

A lot happened after the launch, and reactions were mixed. This suggests that Google has less confidence in Gemini. Questions about the integrity of the hands-on video with the AI chatbot also went viral. Despite Google's claim that Gemini is its most powerful and versatile model, many people and reports suggest that there is a gap between what Google has shown us and reality. That gap is worth examining, so let's look at why Google's AI demo was fake.

What is Google’s Gemini AI model?

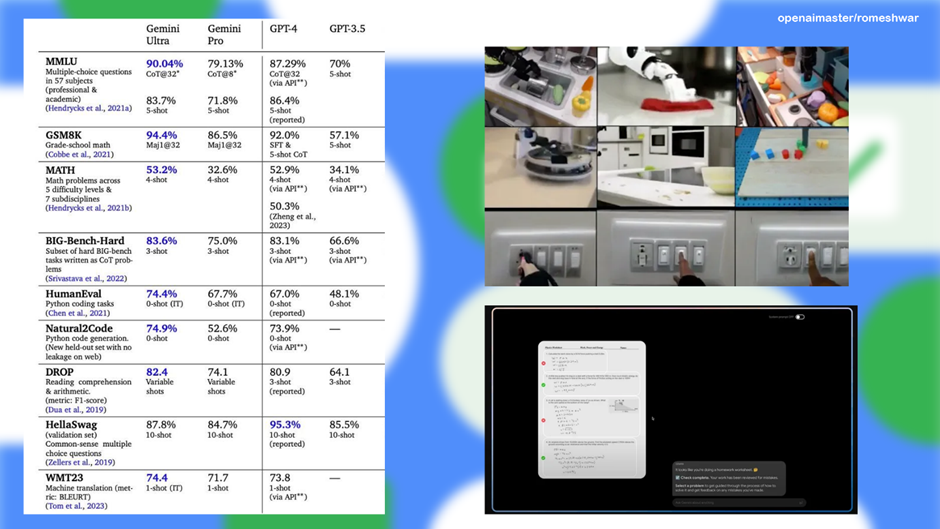

Gemini was developed by Google DeepMind, the division formed by merging DeepMind with Google's Brain team. Instead of training separate AI models for images, audio and text, the team built Gemini from scratch as a single foundation model that handles different media formats. Google's Gemini is close to Meta's LLaMA 2 and OpenAI's GPT-3.5 and even outperforms them in specific tasks. Apple, meanwhile, has yet to showcase any generative AI for the iPhone. Google claims that Gemini has outperformed other AI models, including OpenAI's GPT-4, on 30 of the industry's 32 most widely used benchmarks, including Massive Multitask Language Understanding (MMLU).

In addition, the company has released Gemini in three different sizes: Ultra, Pro and Nano. Nano is the lightest and is intended to run locally on devices for small tasks such as smart replies and audio summarization without an internet connection. Pro is intended for general tasks, with better accuracy and faster responses. Ultra is designed to handle more complex tasks, mainly for businesses and developers, and will debut early next year.

The company will gradually update the underlying AI model for Bard, Google Search Generative Experience, Chrome, Ads, YouTube, Help Me Write and Android, expanding to more products in the coming months. Alongside these products, Google has also upgraded its infrastructure. It has developed a new TPU, the Cloud TPU v5p, aimed mainly at training AI models efficiently; it is deployed in pods of 8,960 chips.

Google's admission

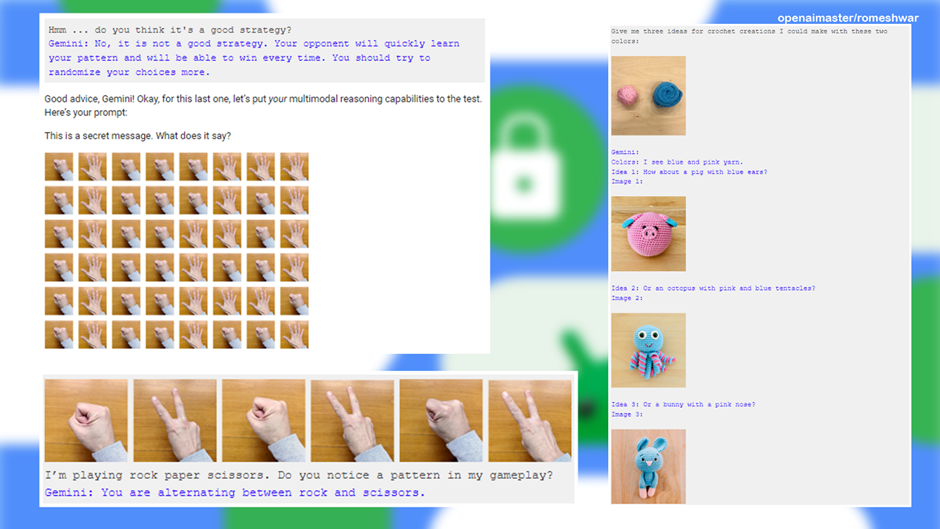

Bloomberg published a report on the Google Gemini demo video. In that report, a Google spokesperson pointed to the disclaimer in the video: "For the purpose of this demo, latency has been reduced and the Gemini output has been shortened for brevity." The voice in the demo was reading out human-written prompts that had been given to Gemini, along with still images for reference.

The demo was impressive, though. Google quickly launched sales campaigns to persuade OpenAI's enterprise customers to switch to Google. Still, an impressive demo can only take you so far: with Duplex, Google demonstrated some creepy technology that ultimately went nowhere.

The immersive moments in the demo showcase the different capabilities and reasoning skills that Google DeepMind has developed over the years. Many of the features are not unique and can be replicated with ChatGPT Plus. Glossing over such details points to a broader marketing effort. Furthermore, the launch of Gemini also raised concerns about the high cost of running generative AI models. Additionally, the $80 million increase affected AI development in response to Gemini's capabilities.

Google used manipulated video for the Gemini demo


In the demo video, the interaction appears seamless. But the video wasn't shot in real time, and Gemini wasn't responding to voice commands. Nor was Gemini actually watching video, as the demo implied. Instead, still image frames from the footage were sent to Gemini along with text prompts, and Gemini responded to those. Of the multiple responses generated, only the best one was chosen.

In other words, Gemini was responding to human-written hints rather than discovering things on its own. On benchmarks, it can outperform GPT-4 on grade-school math, multiple-choice exams and other tests, which cover subjects such as high school science, professional law and moral scenarios. The current AI race is almost entirely defined by such benchmark results.

Google's Gemini AI model is undoubtedly one of the most capable and performs well in understanding, summarizing, reasoning, coding and planning tasks. Meanwhile, OpenAI filed a trademark application for GPT-5 in July, which is still pending. It is also said that GPT-3 will be discontinued at the end of this year, making GPT-4 the base version, while GPT-4 Turbo would be offered through ChatGPT Plus, the paid tier, with better accuracy and efficiency.

Although Alphabet was one of the first companies to use AI, Microsoft maintains its leading position in the AI industry. Meanwhile, experts and users are weighing the technology's potential promise and dangers. The debate also raises concerns that the technology could eventually eclipse human intelligence, which could lead to the loss of millions of jobs. It could also enable more divisive behavior, such as spreading disinformation or aiding the development of nuclear weapons.