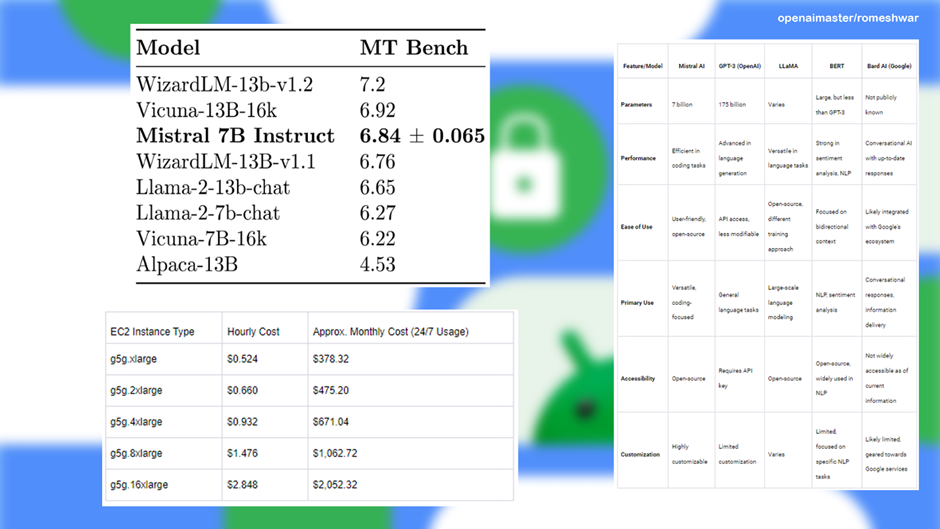

Mistral AI has officially announced the Mistral 7B and Mistral 7B Instruct foundation models. Both are built on the transformer architecture, offer low latency and can handle long sequences of up to 8,000 tokens, which places them among the best-performing base models of their size. They offer capabilities similar to those of other LLMs and reflect the expertise of the company's founders, former AI researchers at Meta AI and Google DeepMind, who set out to compete with other AI models and build AI that benefits people.
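To make the 8,000-token figure concrete, here is a minimal sketch of how an application might keep a prompt within that budget while leaving room for the model's reply. The `fit_to_context` helper and the `CONTEXT_WINDOW = 8000` constant are illustrative assumptions, not part of any Mistral API, and the integer token IDs stand in for whatever a real tokenizer produces.

```python
# Hypothetical helper: fit a prompt into a fixed context window.
# CONTEXT_WINDOW = 8000 follows the figure quoted in the article (an assumption here).
CONTEXT_WINDOW = 8000


def fit_to_context(prompt_tokens: list[int], max_new_tokens: int,
                   context_window: int = CONTEXT_WINDOW) -> list[int]:
    """Keep the most recent prompt tokens so that prompt + generation fits the window."""
    if max_new_tokens >= context_window:
        raise ValueError("max_new_tokens must be smaller than the context window")
    budget = context_window - max_new_tokens
    # Drop the oldest tokens first; the most recent context is kept.
    if len(prompt_tokens) > budget:
        return prompt_tokens[-budget:]
    return prompt_tokens


# Usage: a 10,000-token prompt with 500 tokens reserved for the answer.
kept = fit_to_context(list(range(10_000)), max_new_tokens=500)
print(len(kept))  # → 7500
```

Truncating from the front is only one policy; summarizing older context is a common alternative when early instructions must survive.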

The company aims to reach frontier AI capabilities and to build developer- and enterprise-focused products in the coming months. A model of this quality has the potential to transform the industry and increase productivity. Mistral AI positions its models to compete with those of OpenAI and Google. Notably, Mistral 7B is available to everyone without restrictions. The Mistral AI platform is in beta and is expected to go live in early 2024. The company presents Mistral 7B as a major language model that anyone can use for free, and claims that it outperforms other AI models of comparable size.

Mistral AI model

Mistral AI has also released Mixtral 8x7B, an open-source mixture-of-experts (MoE) large language model. Mistral's model weights have been distributed through various channels, including a 13.4 GB torrent. Because the models are open source, anyone can copy, modify and reuse them, and Mistral AI says they run more efficiently than other LLMs. Mistral 7B also outperforms the larger Llama 2 13B model on all reported benchmarks, despite having about half as many parameters. For comparison, Mistral 7B has 7 billion parameters, while GPT-4 reportedly has about 1,800 billion (1.8 trillion).
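The parameter counts above translate directly into hardware requirements. Here is a rough sketch, assuming 2 bytes per parameter (16-bit weights) and counting only the weights themselves, not activations or the attention cache. `weight_memory_gb` is a hypothetical helper, and the GPT-4 figure is the reported, unconfirmed one.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory (GB, where 1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * bytes_per_param / 1e9


MISTRAL_7B = 7e9        # rounded parameter count from the article
GPT4_REPORTED = 1.8e12  # reported, unconfirmed figure for GPT-4

for name, params in [("Mistral 7B", MISTRAL_7B), ("GPT-4 (reported)", GPT4_REPORTED)]:
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB at 16-bit precision")
```

At roughly 14 GB of weights, Mistral 7B fits on a single 24 GB GPU with room to spare, whereas the reported GPT-4 size works out to about 3,600 GB and would have to be spread across many accelerators. Quantizing to 4 bits (`bytes_per_param=0.5`) would shrink the 7B weights to about 3.5 GB.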

The 7B foundation model has 7 billion parameters, is highly customizable, and supports English text and code generation. The startup's technology will let companies integrate and deploy chatbots, search engines, online tutors and other AI-powered products. Like other companies exploring AI models, Mistral AI aims to generate revenue this way.

Under the hood, the model runs on a GPU with 24 GB of VRAM: it processes an input sequence and predicts the next word in the series, one step at a time. You can start using Mistral's models on Hugging Face, or deploy them yourself and access them via an API. Mistral AI has created a GitHub repository and a Discord channel for troubleshooting and collaboration. It has also stated that it will make the changes and adjustments needed to comply with the EU AI Act.
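The "predict the next word, append it, repeat" loop can be illustrated with a toy model. This sketch assumes a hand-written score table (`TOY_SCORES`, hypothetical) in place of a neural network and uses greedy decoding. A real model such as Mistral 7B scores its whole vocabulary conditioned on the entire sequence, and is often sampled rather than always taking the top choice.

```python
# Toy stand-in for a language model: maps the last word to next-word scores.
# A real LLM conditions on the whole sequence, not just the previous word.
TOY_SCORES: dict[str, dict[str, float]] = {
    "the": {"model": 0.6, "data": 0.4},
    "model": {"predicts": 0.9, "<eos>": 0.1},
    "predicts": {"the": 0.3, "tokens": 0.7},
    "tokens": {"<eos>": 1.0},
}


def next_token(sequence: list[str]) -> str:
    # Unknown words end the sequence in this toy model.
    scores = TOY_SCORES.get(sequence[-1], {"<eos>": 1.0})
    # Greedy decoding: pick the highest-scoring candidate.
    return max(scores, key=scores.get)


def generate(prompt: list[str], max_new_tokens: int = 10) -> list[str]:
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(sequence)
        if token == "<eos>":  # end-of-sequence marker stops generation
            break
        sequence.append(token)  # each prediction becomes part of the next input
    return sequence


print(" ".join(generate(["the"])))  # → the model predicts tokens
```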

Mistral AI's foundation models also rely on customized training, tuning and data processing. This benefits sustainability and efficiency by reducing training time, cost and energy consumption, and with them the environmental impact of AI. Mixtral 8x7B has been described as a scaled-down GPT-4: it is a MoE built from eight 7B-scale experts, whereas GPT-4 reportedly consists of experts of about 111B parameters each, plus about 55B shared attention parameters.
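The mixture-of-experts idea can be sketched in a few lines. Mistral describes Mixtral 8x7B as routing each token to two of its eight experts and combining their outputs. The toy below mirrors that top-2 pattern, assuming tiny vectors, a hand-set linear router and experts that simply scale their input (all hypothetical), so it shows the routing mechanics rather than Mixtral's actual layers.

```python
import math
from typing import Callable

Expert = Callable[[list[float]], list[float]]


def route(x: list[float], router_weights: list[list[float]]) -> list[float]:
    """Linear router: one score (logit) per expert for this token."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]


def moe_layer(x: list[float], router_weights: list[list[float]],
              experts: list[Expert], k: int = 2) -> list[float]:
    logits = route(x, router_weights)
    # Keep the k highest-scoring experts for this token.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only; these become the mixing weights.
    m = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - m) for i in top]
    weights = [e / sum(exps) for e in exps]
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


calls = [0] * 8  # counts how often each expert runs


def make_expert(i: int) -> Expert:
    def expert(x: list[float]) -> list[float]:
        calls[i] += 1
        return [(i + 1) * xi for xi in x]  # toy expert: scale the input
    return expert


experts = [make_expert(i) for i in range(8)]
# Hypothetical router weights that favour experts 6 and 7 for this input.
router_weights = [[0.0, 0.0] for _ in range(6)] + [[1.0, 2.0], [1.0, 2.0]]
print(moe_layer([1.0, 2.0], router_weights, experts))  # → [7.5, 15.0]
print(calls)  # → [0, 0, 0, 0, 0, 0, 1, 1]
```

The `calls` counter shows why MoE models are cheap to run for their size: all eight experts hold parameters, but only two do work for any given token.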

Benefits of Mistral 7B v0.1

Using the Mistral AI model has several benefits: it adapts well and can be customized to specific tasks and user needs. Its strengths include:

- Text summarization, classification, text completion and code completion

- Answering questions over long contexts

- Coding

- Mathematics and reasoning

- Generating human-like prose and code in seconds

- Processing and generating text faster than larger proprietary solutions

Mistral AI's models are resource-friendly: the first-generation model is more efficient to train and cheaper to deploy. Since it is open source, you can fine-tune it to your needs, and it can be used for both research and commercial purposes. Mistral AI reports that this small model outperforms comparable models, such as Llama 7B, on all benchmarks.

Mistral AI Funding

The company consists of data scientists, software engineers and machine learning experts from DeepMind, Meta AI and Hugging Face.

Following its latest funding round, the company is valued at about $2 billion, with significant investment from Andreessen Horowitz, BNP Paribas, General Catalyst, La Famiglia, Eric Schmidt, New Wave, Motier Ventures, Sofina, NVIDIA Corp. and Salesforce. Its ambitious roadmap calls for developing frontier models focused on summarization and answering user questions.

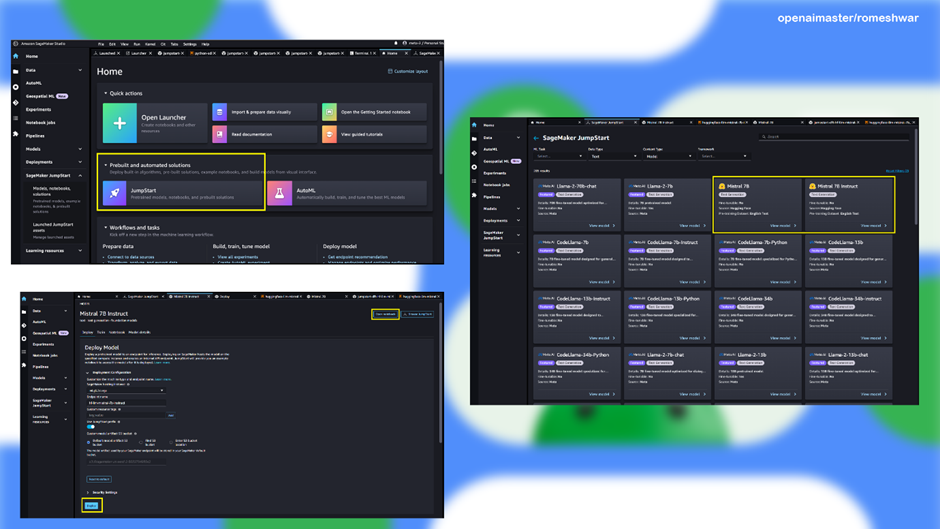

Mistral AI is now available on Amazon SageMaker and Google Cloud Vertex AI.

You can deploy the Mistral 7B model on AWS and access algorithms and models through SageMaker Studio, a web-based visual interface for development and monitoring that covers both training and deploying ML models. Amazon SageMaker provides network-isolated environments and customizable models, and can be driven through the SageMaker Python SDK. The model is also available as a download and as a Docker image, which makes it easier to set up a completion API on any cloud provider with NVIDIA GPUs.
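Once a completion endpoint is running, requests to Mistral 7B Instruct wrap each user message in `[INST] ... [/INST]` markers. The sketch below builds such a prompt and a JSON request body. The `inputs`/`parameters` layout follows the Hugging Face text-generation-inference convention, which is an assumption about how your endpoint is configured, and the exact whitespace should be checked against the model's own chat template. The helper names are hypothetical, and sending the request (for example through the SageMaker Python SDK) is not shown.

```python
import json
from typing import Optional


def build_instruct_prompt(turns: list[tuple[str, Optional[str]]]) -> str:
    """Format (user, assistant) turns in the [INST] style used by Mistral 7B Instruct.

    The last turn's assistant reply should be None: that is what the model completes.
    """
    prompt = "<s>"
    for user, assistant in turns:
        prompt += f"[INST] {user} [/INST]"
        if assistant is not None:
            prompt += f" {assistant}</s>"  # close each finished assistant turn
    return prompt


def build_payload(prompt: str, max_new_tokens: int = 256, temperature: float = 0.7) -> str:
    # Assumed text-generation-inference style body; adjust to your endpoint's schema.
    return json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "temperature": temperature},
    })


prompt = build_instruct_prompt([("Summarize the Apache 2.0 license in one sentence.", None)])
print(build_payload(prompt, max_new_tokens=64))
```

Keeping prompt formatting separate from transport makes it easy to reuse the same template whether the model is served from SageMaker, Vertex AI or a self-hosted Docker container.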

You can also add the Mistral AI model to Google Cloud's Vertex AI for a flexible AI solution; Google Cloud aims to become the best platform for the open-source AI community and ecosystem. The model is released under the Apache 2.0 license, which allows developers to deploy it in the cloud or on gaming GPUs, and it is designed to outperform competing models across benchmarks. Despite arguments that AI, including these technologies, could be used to spread disinformation and other harmful material,