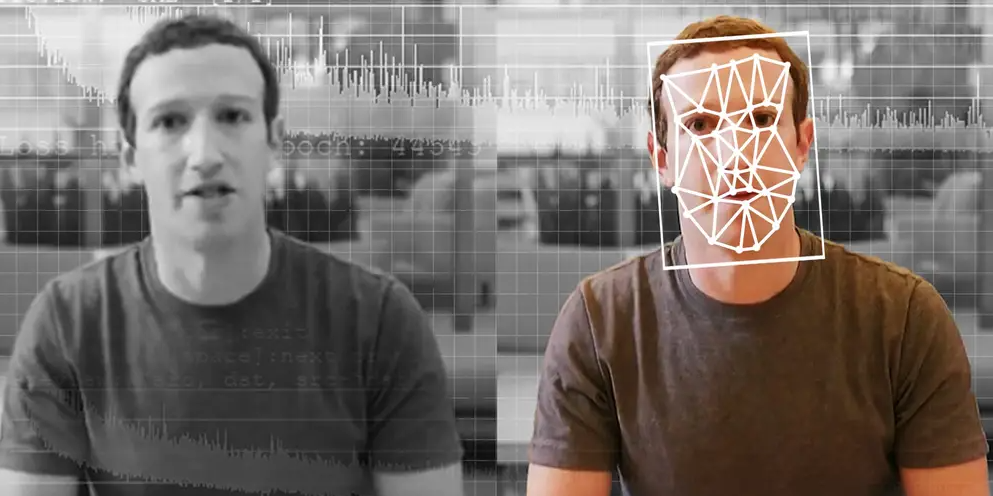

Deepfakes, a portmanteau of “deep learning” and “fake,” are media in which a person in an existing image, audio recording, or video is replaced with the likeness of someone else using artificial intelligence. These synthesized media have rapidly grown in sophistication, making AI-manipulated files harder to detect as fake. While deepfakes offer new creative possibilities, their potential for abuse raises complex questions around ethics, privacy and disinformation.

How does deepfake AI technology work?

Deepfakes commonly rely on a machine learning technique called generative adversarial networks (GANs) to create synthetic media that can pass as authentic. A GAN uses two competing neural networks:

- Generator – Creates synthetic images, video and audio that mimic real data.

- Discriminator – Tries to detect which media is fake and provides feedback to the generator.

Through an iterative training process, the generator model becomes proficient at creating highly realistic media that can fool both AI and humans.
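To make the generator and discriminator loop more concrete, here is a minimal sketch in plain Python. It is a toy, not a real deepfake model: the "media" are single numbers drawn from a bell curve, the generator is a line `a*z + b`, and the discriminator is a one-variable logistic classifier. All names, the learning rate and the data distribution are illustrative assumptions, and a simple setup like this can oscillate rather than settle.

```python
import math
import random


def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generate(gen, noise):
    # Generator: maps random noise z to a synthetic sample a*z + b.
    return [gen["a"] * z + gen["b"] for z in noise]


def discriminate(disc, x):
    # Discriminator: estimated probability that x is real.
    return sigmoid(disc["w"] * x + disc["c"])


def disc_loss(disc, real, fake):
    # Binary cross-entropy: real samples labeled 1, generated samples labeled 0.
    eps = 1e-12
    loss_real = -sum(math.log(discriminate(disc, x) + eps) for x in real) / len(real)
    loss_fake = -sum(math.log(1.0 - discriminate(disc, x) + eps) for x in fake) / len(fake)
    return loss_real + loss_fake


def disc_step(disc, real, fake, lr):
    # One gradient-descent step on the discriminator loss.
    gw = gc = 0.0
    for x in real:
        err = discriminate(disc, x) - 1.0  # d(loss)/d(logit) for a real sample
        gw += err * x / len(real)
        gc += err / len(real)
    for x in fake:
        err = discriminate(disc, x)  # d(loss)/d(logit) for a fake sample
        gw += err * x / len(fake)
        gc += err / len(fake)
    return {"w": disc["w"] - lr * gw, "c": disc["c"] - lr * gc}


def gen_step(gen, disc, noise, lr):
    # One step on the non-saturating generator loss -log D(G(z)):
    # the discriminator's feedback pushes samples toward what it scores as real.
    ga = gb = 0.0
    for z in noise:
        x = gen["a"] * z + gen["b"]
        err = (discriminate(disc, x) - 1.0) * disc["w"]  # d(loss)/dx
        ga += err * z / len(noise)
        gb += err / len(noise)
    return {"a": gen["a"] - lr * ga, "b": gen["b"] - lr * gb}


def train(steps=200, batch=64, lr=0.05, seed=0):
    # Alternate discriminator and generator updates (hypothetical settings).
    rng = random.Random(seed)
    gen = {"a": 1.0, "b": 0.0}
    disc = {"w": 0.0, "c": 0.0}
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]  # stand-in "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        disc = disc_step(disc, real, generate(gen, noise), lr)
        gen = gen_step(gen, disc, noise, lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print("generator parameters:", gen)
    print("discriminator parameters:", disc)
```

Real systems replace the line and the logistic classifier with deep neural networks and the numbers with images or audio, but the alternating update pattern is the same idea.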

To swap a person’s face or voice, the generator is trained on extensive data about the target person. It learns to synthesize their facial expressions, speech patterns and other qualities. The resulting deepfakes show the targeted person saying or doing things they never actually said or did.

Evolution of deepfake AI technology

| Year | Milestone |
| --- | --- |
| 2017 | First deepfake algorithm is published and quickly used to create non-consensual porn videos on Reddit. |
| 2018 | Improved deepfake algorithms emerge, making synthesis more accessible. |
| 2019 | The Zao app demonstrates how easily deepfake face swaps can be created from selfies, and goes viral in China. |
| 2020 | Videos of politicians manipulated with deepfakes circulate on social media. |
| 2021 | AI startup Dessa releases a deepfake text-to-speech voice cloning service. |
| 2022 | Meta (Facebook) creates a universal voice model that can mimic any voice. |

In just a few years, deepfakes have evolved from visibly flawed output to seamless, photorealistic results as AI capabilities have improved. This makes detection increasingly difficult.

Potential applications of deepfake technology

Deepfakes are used in many legitimate ways in media, entertainment, education and the arts. Possible applications include:

- Visual effects for movies and TV shows

- Interactive educational simulations

- New forms of synthetic social media influencers

- Next generation chatbots with personalized voices

- Augmented reality filters and lenses

- Virtual product sampling and digital fashion

- Animation of game characters and cinematic scenes

- Artistic multimedia installations

However, deepfakes also enable unethical uses, such as non-consensual intimate images, fraud, fake news and hoaxes that appear authentic. As the technology continues to spread, managing risk alongside innovation is critical.

Risks and challenges of deepfakes

Potential dangers and societal consequences of malicious use of deepfake technology include:

- Revenge porn – Victims’ faces are swapped into intimate media without consent. This is disproportionately used to harass women.

- Fake news – Public figures are shown saying or doing things they never did in order to spread disinformation, which undermines public trust.

- Financial fraud – AI voices used to spoof identities and bypass voice authentication systems to steal money.

- Hoaxes – Fabricated images of events constructed to manipulate public sentiment.

- Reputational damage – Individuals or groups are depicted in compromising deepfake scenarios.

- Influence operations – Governments or political groups use deepfakes to influence elections and sow discord.

As deepfake generation becomes more accessible, the potential for harm grows.

Emerging deepfake detection techniques

Although AI synthesis is constantly improving, deepfakes still contain subtle clues that reveal their artificial origins, including:

- Unnatural blinking, mouth movements or facial expressions

- Strange skin textures, lighting, or warping artifacts

- Mismatched audio and video quality

- Unnatural voice tones and cadence

Researchers are developing deep learning tools aimed at identifying these artifacts at the pixel and audio level. Startups are also creating blockchain-based digital media authentication techniques.
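As a rough illustration of how one of the clues above, unnatural blinking, could be checked automatically, here is a small heuristic sketch. It assumes some upstream tool has already produced a per-frame "eye openness" score between 0 and 1. That score, the function names and every threshold are hypothetical placeholders, not values from a real detector, and production systems use trained models rather than hand-set rules.

```python
def count_blinks(eye_openness, closed_below=0.2):
    # Count open -> closed transitions in a per-frame eye-openness signal.
    # closed_below is an illustrative threshold, not a calibrated value.
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(eye_openness, fps,
                             min_per_minute=5.0, max_per_minute=40.0):
    # Flag a clip whose blink rate falls outside an assumed plausible range.
    # The range limits are placeholders chosen for the example only.
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute


if __name__ == "__main__":
    # 30 seconds at 10 frames per second with no blinks at all.
    stare = [1.0] * 300
    print(blink_rate_is_suspicious(stare, fps=10))
```

A single cue like this is easy for newer generators to avoid, so it would only ever be one weak signal among many.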

However, deepfake generation can outpace detection. Ultimately, education in critical thinking and media literacy is crucial to empowering the public.

Steps to manage deepfake risks

- Industry standards – Social networks and media platforms need consistent policies for dealing with deepfake content, focused on transparency and consent.

- Government regulation – Laws that address the generation of deepfake pornography, fraud and hoaxes that cause public or personal harm. However, overregulation threatens to limit innovation.

- Academic research – Advancing deepfake detection techniques and a better understanding of how synthesized media influences viewers.

- Media literacy programs – Teach audiences to think critically about the authenticity of online media and to consider the motivations behind it.

- Transparent labelling – Clear watermarks that reveal media as AI-generated while preserving creative use.

A multi-part strategy involving stakeholders from across the technology sector, government and the public is needed to maximize opportunities while mitigating risks.
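The transparent labelling step could take several forms. The sketch below swaps the visible watermark for a simpler stand-in: a signed metadata label attached to the file, which records that the media is AI-generated and lets a verifier detect tampering. The function names, the manifest fields and the shared secret key are all assumptions made for illustration. A real scheme would more likely use public-key signatures and an established provenance standard.

```python
import hashlib
import hmac
import json


def make_label(media_bytes, secret_key, generator_name):
    # Build a small manifest describing the media, then sign it with HMAC-SHA256.
    manifest = {
        "ai_generated": True,
        "generator": generator_name,  # hypothetical tool name
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_label(media_bytes, label, secret_key):
    # Check that the manifest was not altered and still matches the media.
    payload = json.dumps(label["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, label["signature"])
    actual_hash = hashlib.sha256(media_bytes).hexdigest()
    content_ok = hmac.compare_digest(label["manifest"]["sha256"], actual_hash)
    return signature_ok and content_ok


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"
    media = b"pretend these bytes are a video file"
    label = make_label(media, key, "example-generator")
    print(verify_label(media, label, key))
    print(verify_label(media + b"edited", label, key))
```

A label like this only helps when platforms check it and creators cannot silently strip it, which is why it appears alongside industry standards and regulation rather than replacing them.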

The future of AI-generated media

While deepfakes pose challenges, the fundamental AI synthesis techniques that power them represent a creative frontier. As algorithms improve, we may see:

- Fully animated game characters based on actors’ performances

- Ultra-realistic VR avatars that capture identity and emotion

- Next generation voice assistants with personalized and expressive speech

- Immersive film and TV VR experiences built with deepfake actors

- AI presenters and synthetic news anchors customized to your preferences

- AI-powered content creation platforms that allow anyone to create polished videos

Deepfakes and synthetic media will enable new means of expression. Managing risk without limiting innovation requires nuance and collaboration among all stakeholders.

Conclusion

Deepfakes use powerful AI techniques such as GANs to generate highly realistic fake media, enabling new creative possibilities but also the potential for harm. With deepfake capabilities rapidly developing, managing risk through education, regulation, transparency and advanced detection will be critical. While deepfake technology is inherently dual-use, it ultimately represents the growing democratization of intelligent tools, allowing anyone to become a creator. Synthetic media will fundamentally transform the way we produce and consume entertainment, news, education and art in the coming decades.
